In the User Research for UX course at UC Berkeley Extension, I had the opportunity to do hands-on research in a mobile app category of my choice. I chose to explore user insights about mobile cooking apps.

I used three research methods: in-person interviews, a remote unmoderated usability test, and an online survey.

My overall goals for the user research assignments were to find out:

I chose the following two cooking apps for testing:

Yummly (in-person interviews). Yummly provides recipe recommendations personalized to the individual’s tastes, semantic recipe search, a digital recipe box, a shopping list, and one-hour grocery delivery.

Allrecipes (remote user testing and online survey). Allrecipes is the original and largest food-focused social network, created for cooks by cooks, where everyone plays a part in helping cooks discover and share the joy of home cooking.

We created a project plan for structured one-on-one interviews that summarized the who, what, when, where, and why of the testing.

In the interviews, I sought to find out:

I interviewed three people who were the primary cooks in their household. I was interested in finding out if they had adopted this relatively new technology to help with meal preparation. I also asked them some specific questions about the usability of the Yummly app.

I tried to avoid asking the following:

I found that none of the three respondents was very interested in using an iPhone app to help with meal preparation, and each had difficulty with some of Yummly’s key features, such as filtering by ingredient preferences and saving a recipe to view later.

More important than the test data, however, was what I learned about how to make the study better next time:

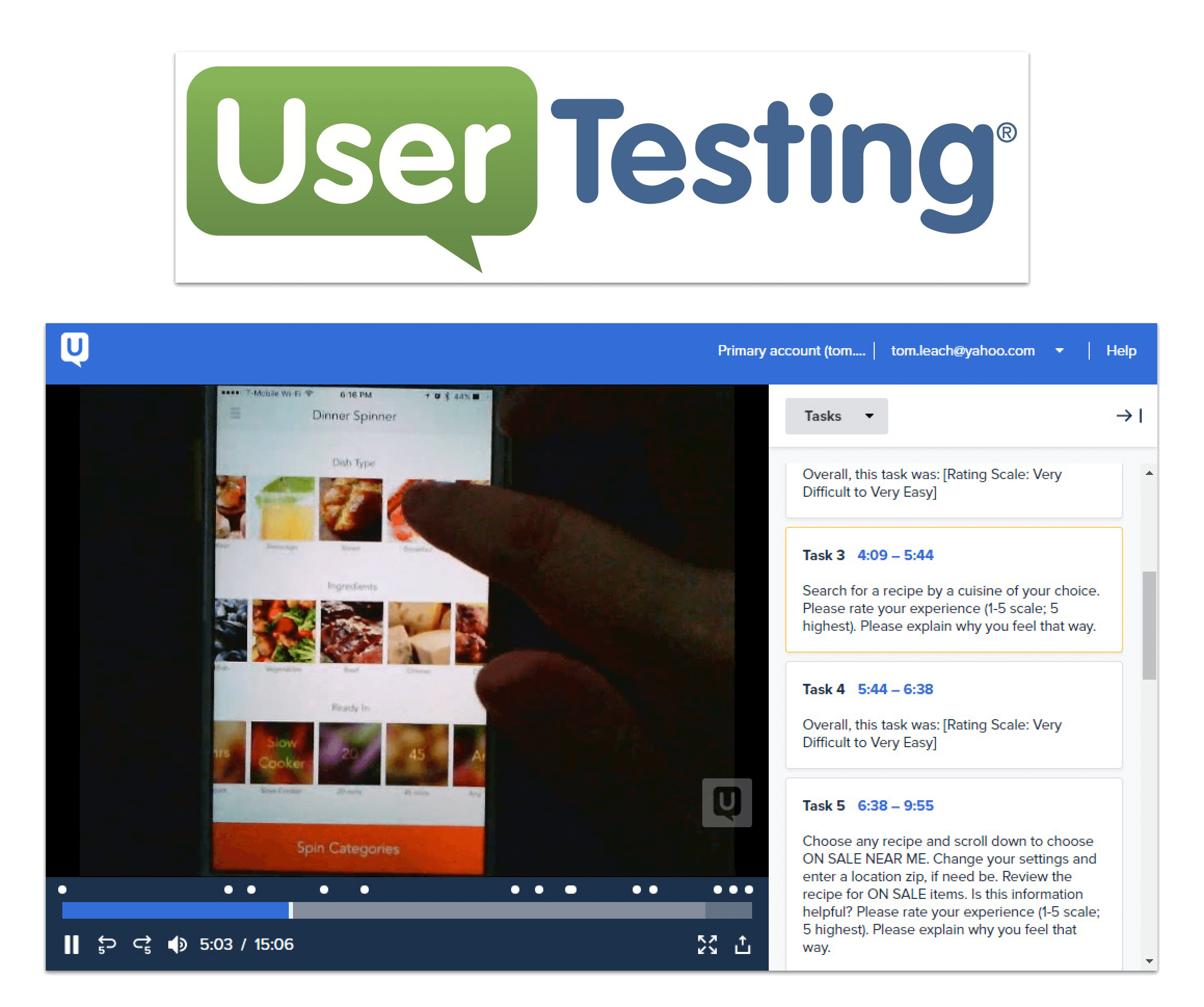

My second test was a remote, unmoderated usability test of the cooking app Allrecipes. I ran it through the User Testing service (www.usertesting.com).

This time I devised roughly ten questions that asked for both quantitative and qualitative responses. Testers were asked to rate their experience with a particular feature on a 1-5 scale (5 being highest) and to explain why they felt that way.

I submitted my task list to User Testing and soon received an email saying that three respondents had completed the test. I had the opportunity to watch and listen as they worked through my questions. They all did a great job articulating their actions and opinions about certain features.

Being able to listen to the respondents as they worked through the questions added a lot of qualitative feedback. I could hear their frustration when they had difficulty. One respondent was an avid user of the Allrecipes website, which, I believe, led her to overrate some features even though she did not understand some of their functionality.

How could the experience be better?

For the last user research test, we were asked to use Google Forms to create a 15-question survey that respondents could easily complete via a link.

Rather than use classmates and friends as respondents, I recruited workers through the Amazon Mechanical Turk service. This let me tap into a large pool of respondents (92) and get results fast: within 30 minutes!

This study helped to answer my first two questions:

I received 92 responses to the survey. This group was more enthusiastic about using an app for meal preparation than the participants in my other studies.

Some highly requested features:

How could this study be better? Screening participants was cost-prohibitive on Amazon Mechanical Turk, but adding a few questions to gather background information about the respondents would have made the data more meaningful.